Unsupervised Visual Learning: An Empirical Study

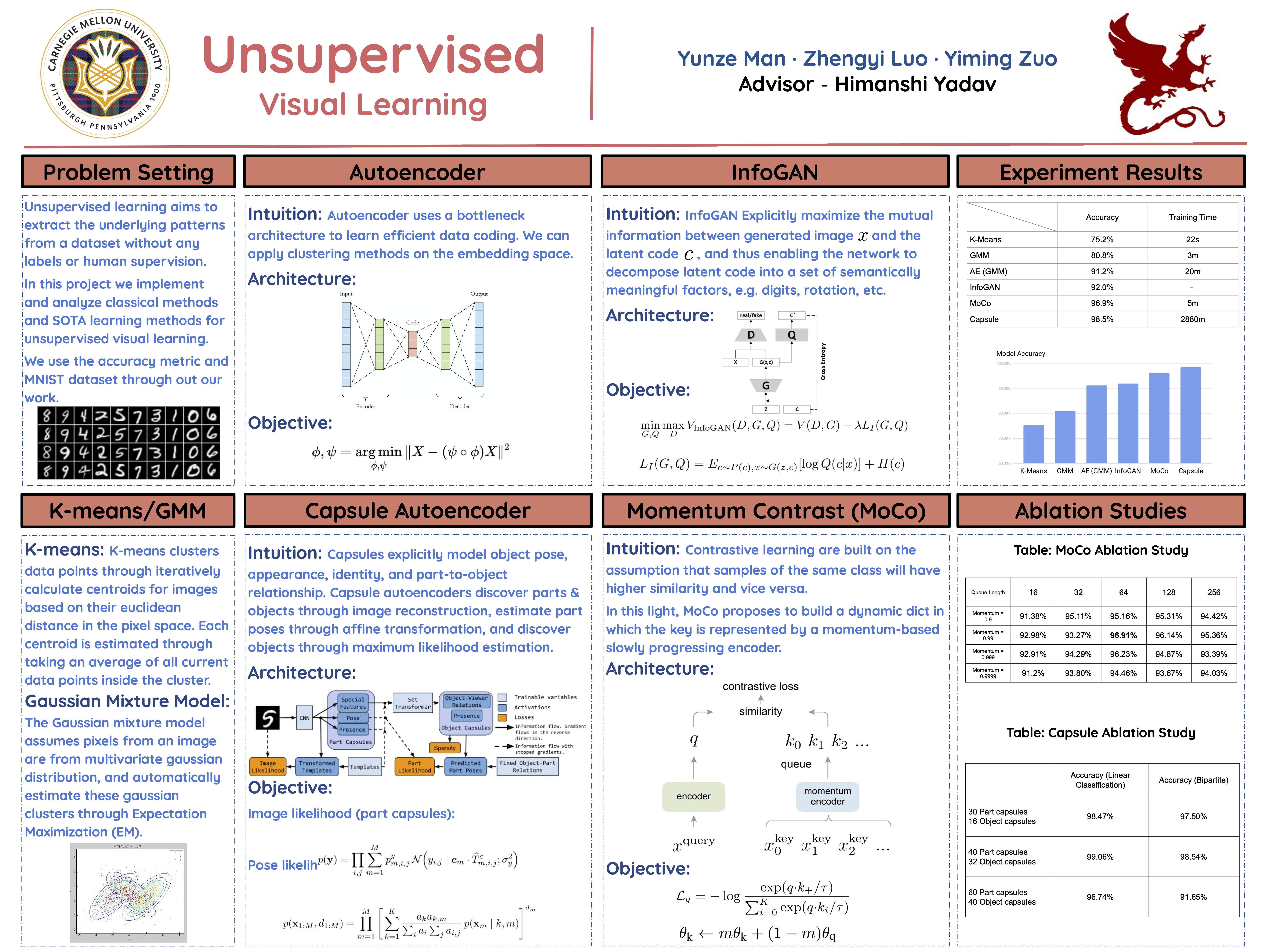

Unsupervised learning is a fundamental sub-field of machine learning that has attracted significant attention. In this study, we focus on unsupervised visual learning, where the central problem can be formulated as: how do we learn underlying patterns from image data so that we can classify visual entities without using labels? Supervised learning remains popular, but it requires large, clean, and carefully handcrafted datasets, so unsupervised learning is becoming increasingly appealing due to its ability to learn from massive amounts of unlabeled data. Traditional methods such as K-means and Gaussian Mixture Models (GMM) have shown promising unsupervised classification performance on benchmark datasets like MNIST. In recent years, benefiting from the development of deep learning and the ever-growing availability of computational resources, more advanced unsupervised learning methods such as InfoGAN, Capsule Networks, and MoCo have been proposed.
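
To make the idea of classifying without labels concrete, here is a minimal sketch of K-means used as an unsupervised classifier. It is an illustration only, not the code used in this study: the data are toy 4-pixel "images" rather than MNIST, the helper names `kmeans` and `cluster_accuracy` are hypothetical, and scoring clusters by majority vote against held-out labels is an assumed evaluation convention. The labels are used only for scoring, never for fitting.

```python
import random
from collections import Counter


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's K-means on a list of equal-length float tuples."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initialize centers from the data
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        assign = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c])))
            for p in points
        ]
        # Update step: each center moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centers[c] = tuple(sum(col) / len(members)
                                   for col in zip(*members))
    return assign


def cluster_accuracy(assign, labels):
    """Score clusters by mapping each one to its majority label (assumed metric)."""
    correct = 0
    for c in set(assign):
        members = [l for a, l in zip(assign, labels) if a == c]
        correct += Counter(members).most_common(1)[0][1]
    return correct / len(labels)


# Toy data: two well-separated classes of 4-pixel "images" (hypothetical).
rng = random.Random(42)
images, labels = [], []
for label, level in [(0, 0.0), (1, 1.0)]:
    for _ in range(50):
        images.append(tuple(level + rng.gauss(0, 0.05) for _ in range(4)))
        labels.append(label)

assign = kmeans(images, k=2)            # fit without looking at labels
acc = cluster_accuracy(assign, labels)  # labels used for evaluation only
print(f"cluster accuracy: {acc:.2f}")
```

On real image data the clusters are far less cleanly separated than in this toy setup, which is part of what motivates the learned representations discussed in this study.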

What is the intuition behind these methods? Do they perform better than traditional methods, and are they sensitive to the choice of hyper-parameters? How computationally expensive are they, and are they affordable? In this study, we thoroughly analyze the pros and cons of classical and state-of-the-art methods and try to answer these questions.

[This is my final project for 10701 Machine Learning at CMU, which received a mark of 100/100!]